Interview

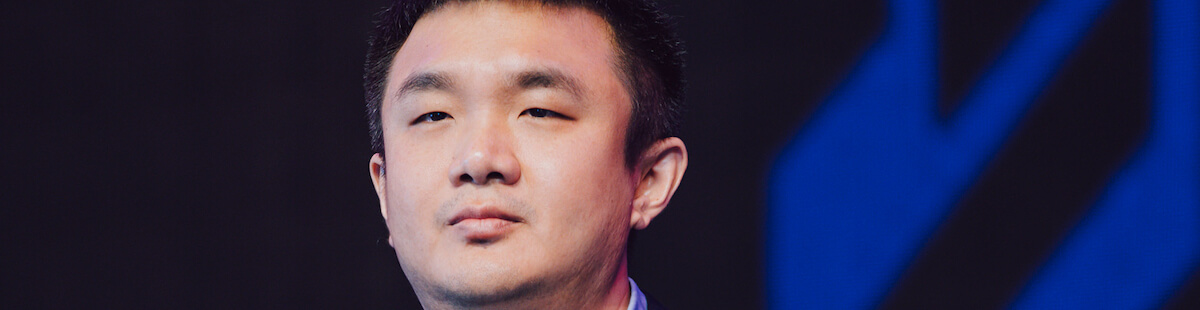

For the latest expert interview on our blog, we’ve welcomed Dhonam Pemba to share his thoughts on artificial intelligence (AI) and the journey behind founding KidX AI.

Dhonam is a neural engineer by PhD, a former rocket scientist and a serial AI entrepreneur with one exit.

He was CTO of Kadho, the company that exited when it was acquired by Roybi for its Voice AI technology. He was also Chief Scientist at Kadho Sports, which had clients in MLB, USA Volleyball, the NFL, NHL, NBA, and NCAA.

His latest company, KidX, is in the AI edtech space, where he has built NLP and Voice assessment to serve China's leading robotics company with 4M users.

Tell us about the business you represent. What are its vision and goals?

Our company is called KidX AI. The X stands for interaction, and our vision is to build the next generation of children’s interactions powered by AI. AI and technology will be part of our lives, and we want to build the safest, most beneficial experience for children.

We are starting with early educational toys because that is where most children get their first experience with technology, and by using passive screens they are missing out in a big way. The first few years of life are also the most important for a child’s brain development, and the adoption of technology for young children could be detrimental if not done correctly.

Our goal is to reimagine the offline learning and play experience for children by remixing physical play with AI and state-of-the-art technologies. We believe in combining digital and physical play to maximize a child’s development, enjoyment, curiosity, and learning.

Can you tell us more about your educational background?

My background is in Biomedical Engineering, and I got my bachelor’s from Johns Hopkins University. Biomedical Engineering is a diverse education because you learn both the life sciences and engineering, so most BME students pick up a minor as well. My minor was Math and my concentration was in Computer Engineering.

I continued the same degree at the University of California, Irvine for my PhD, but the concentration shifted towards Microelectromechanical systems (MEMS). Here the intersection between engineering and life sciences was with neural implants. I was building neural implants that could help restore lost sensory functions such as hearing or motor movement.

Since we were interfacing with the nerves directly, the electrodes needed to be on the microscale. My rocket science and AI work came later through actual experience rather than academic education.

Can you share a little bit about yourself and how you got into the field of artificial intelligence?

Throughout my academic career, I took programming courses and worked with real neural networks, but the new paradigm of AI that we see now, built on Deep Learning, is something I learned after leaving academia.

I started my first edtech company, which developed language teaching apps for kids. For language learning, conversation and judging pronunciation are critical, and this is where we turned to AI. One of the biggest challenges we faced was that few commercial speech recognition solutions could understand children’s voices well. Parents also cared about privacy and did not like having their children’s voices sent to third-party solutions.

At that time, AI was not as widely adopted as it is now, and development was very academic, without the plug-and-play tools that exist today. Our team was made up of myself and app developers, so we had to learn, research, experiment, and produce a commercial children’s voice solution that worked offline.

How did you get involved with TED-Ed Animations?

I originally reached out to them to cover my edtech company in hopes it would lead to downloads of our apps, but they were more interested in the work I did with JPL.

I had a project with NASA’s Jet Propulsion Laboratory that was very similar to my PhD thesis but with a different application. For my PhD, I was building microneedles for the body, while for JPL the microneedles served as ion thrusters for space.

The project was a proof of concept for Field Emission Electric Propulsion. The idea was to prove that micro-scale needle-shaped cones could propel ions. If we had indium at the base of the cone, and we melted it while attracting it with an electric grid, then the liquid could rise to the tip of the cone and propel ions out. I worked with a writer and animator at TED-Ed to create the video titled “Will future spacecraft fit in our pockets?”.

What do your day-to-day responsibilities look like at your organisation?

As the founder of a startup, my day-to-day responsibilities vary a lot. I can give readers better, more meaningful examples of day-to-day responsibilities from when I have my AI manager hat on.

At any company, the use of AI is to help deliver a solution or experience of value to either a user or another business, which means it must go beyond experimental proof of concept demos that are usually done in labs for research papers. Producing a valuable product means responsibilities throughout the product cycle from research to development to testing to user experience.

Ultimately, the goal is to deliver a successful product to either a customer or a client, which means I am responsible for understanding the requirements and translating them to internal deliverables.

Day to day, my role becomes more about checking in on everyone’s progress, making sure any obstacles are communicated, and then innovating. It’s my responsibility to stay up to date with state-of-the-art technology and think about what’s next for us.

Because of my academic background, I would usually experiment and research new methods while managing the overall development. For example, if the NLP engineers are training and updating our current models for a chatbot, I would experiment with new approaches and models, and when I find something promising hand it over to the team to dig deeper.

Although our company has individuals dedicated to specific specialities such as data labelling and testing, for my own experiments I still have to do all of these myself, and most AI engineers will too. While experimenting to find the best models, you’ll have to do your own data preparation, testing, and deployment.

What are the key differences between computer science, machine learning, and AI?

The main difference between computer science, machine learning, and AI is the amount of human coding required to get the machine to act like a human. If you want to build a chatbot with computer science, it would require a lot of planning, algorithms, and code.

If you wanted to build a chatbot with machine learning, you would need less code but still need planning. Think of machine learning as handling a task you can do in 2 seconds, for example telling male from female or happy from sad. The results from machine learning are just results, and you would need to connect them to deliver an experience.

For example, a machine learning classifier could identify the intent of someone’s speech but then it would need to connect to another machine learning model to pick out the correct response.
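The two-step flow described above can be sketched in a few lines of Python. This is only a toy illustration, not KidX's system: the training phrases, the intent names, and the `train`, `classify`, and `reply` helpers are all hypothetical, the classifier is a simple word-count scorer, and the response step is a plain lookup table standing in for the second model.

```python
from collections import Counter, defaultdict

# Hypothetical toy training data: (utterance, intent label).
TRAINING = [
    ("hello there", "greeting"),
    ("hi good morning", "greeting"),
    ("what is the price", "pricing"),
    ("how much does it cost", "pricing"),
    ("bye see you later", "farewell"),
    ("goodbye for now", "farewell"),
]

# Stand-in for the response-picking step: a lookup table written by the developer.
RESPONSES = {
    "greeting": "Hello! How can I help?",
    "pricing": "Our plans are listed on the pricing page.",
    "farewell": "Goodbye!",
}
FALLBACK = "Sorry, I did not understand that."


def train(examples):
    """Count how often each word appears under each intent."""
    counts = defaultdict(Counter)
    for text, intent in examples:
        counts[intent].update(text.lower().split())
    return counts


def classify(counts, text):
    """Return the intent whose training words overlap the input most, or None."""
    words = text.lower().split()
    scores = {intent: sum(c[w] for w in words) for intent, c in counts.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None


def reply(counts, text):
    """Step 1: the model predicts an intent. Step 2: glue code picks a response."""
    intent = classify(counts, text)
    return RESPONSES.get(intent, FALLBACK)


model = train(TRAINING)
print(reply(model, "hi there"))
print(reply(model, "how much is it"))
print(reply(model, "tell me a joke"))
```

The classifier only produces a label. Everything that turns that label into an experience, including the fallback for inputs it cannot place, is still written by the developer.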

In both the computer science and machine learning approaches, the developer would need to account for all the various situations in order to give the system common sense reasoning. AI’s goal is to be an end-to-end solution without any programming: just give it enough data and it can replicate a human. In our case, a chatbot could simply be fed lots of conversation logs and then be able to chat with a human using common sense reasoning.

What are some misconceptions that you believe the average person has about AI?

I think the biggest misconception is about the current state of AI. People misjudge how far along we are and what is achievable. AI is still in the machine learning stage, and most AI experiences we perceive are just machine learning models powered by deep learning, with code wrapped around them to give the user a better experience.

What advice would you give to someone wishing to start their career in artificial intelligence?

The best way is to just start. Find something interesting that you like and see how AI is used in that topic, then go to GitHub and try out some open source projects.

What are the most exciting new developments in the AI Edtech industry right now?

I am a little biased because I am building an edtech product, and I think the technologies we are using are exciting. For edtech, beyond personalized learning and adaptive tutoring, the new developments revolve around bridging the gap between online and offline learning, whether that is through interactive videos, augmented reality, or better environmental sensing. I’ll give you an example with our product, KidX Classbox. We have a board that can sense physical toys, which interact with an app that contains interactive videos.

So a child can receive AI-based lessons from a virtual video-based teacher, and that teacher can respond to the child’s physical interactions in the real world. Right now most of the AI in our product is used in adaptive learning, which is not a new development. AI for personalized learning and recommendation systems is previous-generation AI.

What excites me is the improvements in object detection that we are working on to create an immersive experience. Currently, we detect the objects through NFC, which provides location and identity using standard code. However, AI has the ability to go beyond that and detect the objects with extra dimensions such as speed of movement and orientation.

Some of our competitors use computer vision to detect the objects that children play with in the real world, but it is still an older-generation version, and it is limited by the unnatural way the objects need to be shown to the camera, i.e. held in front of the camera at a specific orientation.

If these companies can improve the AI vision models and let children freely move the objects naturally in front of the camera for their apps to detect, that would be a tremendous improvement in merging online with offline play.

Would you like to share any artificial intelligence forecasts or predictions of your own with our readers?

I think we are going to see more Blockchain technology integrated with AI. In 2017 and 2018, we saw Blockchain become hot due to the ICO bubbles, then most of the projects didn’t really deliver, but there has been a lot of improvement and the public is starting to embrace decentralized finance.

Money will drive the first innovations in Blockchain and its adoption, but then other applications will come out, like Arweave for decentralized storage, Theta for decentralized video streaming, and Terra for decentralized payment. NFTs have boomed because they provide proof of ownership of a digital asset on a public ledger. One of the highest-selling NFT collections was 10,000 unique digital pieces made through a program.

I think we will see clever innovators find ways to integrate AI and blockchain in the coming few years.

What is your experience of using AI-backed data analysis or log management tools? What do you think is the benefit of using a log management tool that has machine learning capabilities for an organisation?

This goes back to the misconceptions about AI and its current state. We had several customers in the AI analysis domain, and they all believed AI could just go through the data and provide feedback. We had to give them a realistic expectation of what can be achieved and what is needed.

For example, one of our customers wanted their conversations with sales agents analyzed by AI to provide insight into the data. We had to let them know that first we needed to train a speech recognition model to accurately convert their conversations to text, and then we needed to know what type of data they wanted to analyze, such as keywords that trigger a sale or a refusal by a client.

So in short, AI and machine learning are very helpful for analyzing data, but there is still a lot of work to be done. It’s best to think about what work a human could do with the data. Have a human analyze the data and produce the analysis first on a small sample, then repeat it. Once you have a formula and the steps for a human, you can train machine learning models to replace the “2-second decision” steps made by humans, such as sentiment classification and keyword spotting. Then use human logic to program what to do with the results, and finally produce an analysis report.
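As a rough illustration of that workflow, here is a minimal Python sketch. Everything in it is an assumption made for the example: the transcripts are invented and assumed to be already converted from speech to text, the keyword and sentiment word lists are hypothetical, and the lexicon-based `sentiment` function merely stands in for a trained model.

```python
from collections import Counter

# Hypothetical transcripts, assumed to be already converted from speech to text.
TRANSCRIPTS = [
    "great I will buy the premium plan today",
    "no thanks it is too expensive for us",
    "this sounds good please send the contract",
]

# Hypothetical word lists an analyst might settle on after studying a small sample.
SALE_KEYWORDS = {"buy", "contract", "order"}
REFUSAL_KEYWORDS = {"expensive", "cancel", "no"}
POSITIVE_WORDS = {"great", "good", "please"}
NEGATIVE_WORDS = {"no", "expensive", "bad"}


def spot_keywords(text, keywords):
    """'2-second decision' 1: which tracked keywords occur in the text."""
    return sorted(set(text.lower().split()) & keywords)


def sentiment(text):
    """'2-second decision' 2: toy lexicon score standing in for a trained model."""
    words = text.lower().split()
    score = sum(w in POSITIVE_WORDS for w in words) - sum(w in NEGATIVE_WORDS for w in words)
    return "positive" if score > 0 else "negative" if score < 0 else "neutral"


def analyze(text):
    """Human-written logic that combines the model outputs into an outcome."""
    sale = spot_keywords(text, SALE_KEYWORDS)
    refusal = spot_keywords(text, REFUSAL_KEYWORDS)
    mood = sentiment(text)
    if sale and mood != "negative":
        outcome = "likely sale"
    elif refusal or mood == "negative":
        outcome = "likely refusal"
    else:
        outcome = "unclear"
    return {"outcome": outcome, "sentiment": mood, "sale": sale, "refusal": refusal}


def report(transcripts):
    """Final step: aggregate per-conversation results into a summary."""
    results = [analyze(t) for t in transcripts]
    return Counter(r["outcome"] for r in results)


summary = report(TRANSCRIPTS)
print(dict(summary))
```

In a real project, `spot_keywords` and `sentiment` are the pieces that trained models would replace, while `analyze` and `report` remain ordinary code that encodes the analyst's rules.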

Are there any books, blogs, or any other resources that you highly recommend on the subject of AI?

Andrew Ng is a fabulous teacher. I would recommend all his content, from his Coursera courses to his book.
