Local Logstash Configuration

Ship logs to your hosted Logstash instance at Logit

Follow the steps below to send your observability data to Logit.io

Logs

All Logit.io ELK Stacks include highly available hosted Logstash instances, removing the need to install and maintain your own Logstash server. Logit.io recommends using Filebeat to ship your logs and metrics to your hosted Logstash instance on Logit.io. You then benefit from predefined filters that can be customised from your dashboard.

Install Integration

Before you begin

Logit.io recommends using Filebeat to ship logs and metrics to your hosted Logstash instance on Logit.io, so that you benefit from high availability and predefined Logstash pipelines.

We understand that some customers have a specific requirement to ship logs from a local Logstash instance, so the steps below show how to configure this integration.

Logstash to Logstash

One way to send data from your local Logstash to your Logit.io ELK stack is via your hosted Logstash instance. To do this, configure the output on your local Logstash to use the tcp-ssl port of your hosted Logstash, as shown below. The data you send must be valid JSON.

output {
  tcp {
    codec => json_lines
    host => "@logstash.host"
    port => @logstash.sslPort
    ssl_enable => true
  }
}

Sending directly to Elasticsearch

Another way to send data from your local Logstash instance is to send it directly to Elasticsearch. To do this, your Stack must be in Basic Authentication mode. To enable it, choose Stack Settings > Elasticsearch and switch the authentication mode to basic authentication. Once you have done this, edit the output on your local Logstash to look like the example below.

output {
  elasticsearch {
    hosts => ["@elasticsearch.endpointAddress:443"]
    user => "@elasticsearch.username"
    password => "@elasticsearch.password"
    manage_template => false
    index => "%{[@metadata][index]}-%{+YYYY.MM.dd}"
  }
}

While not required, it may be worthwhile adding the following filter before the output. It adds metadata to your logs that sets the index name: events that did not come from a Beat are indexed as logstash-YYYY.MM.dd, and events from a Beat use the Beat's name in place of logstash.

filter {
  if ! [@metadata][beat] {
    mutate { add_field => { "[@metadata][index]" => "logstash" } }
  } else {
    mutate { add_field => { "[@metadata][index]" => "%{[@metadata][beat]}" } }
  }
}

Launch OpenSearch Dashboards to View Your Data

Launch OpenSearch Dashboards

How to diagnose no data in Stack

If you don't see data appearing in your stack after following this integration, take a look at the troubleshooting guide for steps to diagnose and resolve the problem or contact our support team and we'll be happy to assist.
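As a first local check, it may help to confirm that events are actually leaving your local Logstash pipeline and that the index metadata is set as expected. The fragment below is only a sketch of one way to do that: it adds a temporary stdout output alongside your existing output so each event, including its @metadata fields, is printed to the Logstash console. It is meant for short-lived debugging and should be removed afterwards.

```logstash
output {
  # Temporary debugging output (remove once the issue is diagnosed).
  # rubydebug pretty-prints each event; metadata => true also shows
  # the @metadata fields, such as [@metadata][index].
  stdout {
    codec => rubydebug { metadata => true }
  }
}
```

If events appear in the console but not in your stack, the problem is more likely in the connection or credentials than in the local inputs and filters.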

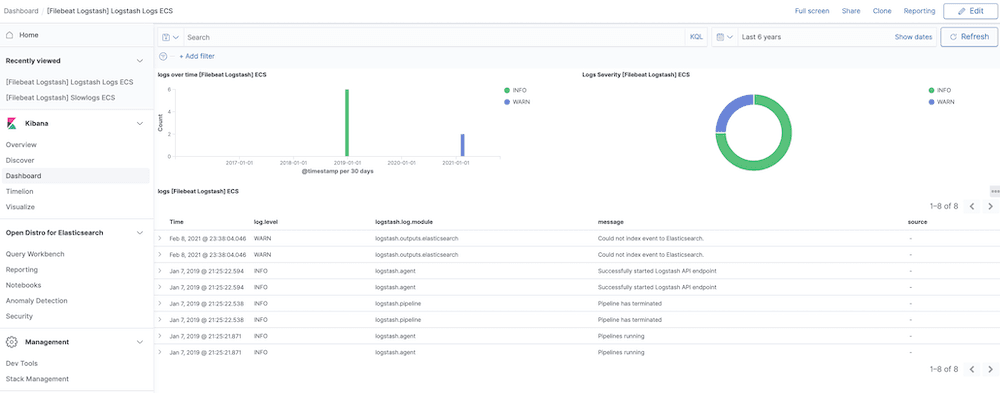

Logstash dashboard

The Logstash module comes with predefined Kibana dashboards. To view your dashboards for any of your Logit.io stacks, launch Logs and choose Dashboards.

Logstash Overview

What Is Logstash?

Logstash is an open-source, lightweight processing pipeline created by Elastic. It is one of the most popular data pipelines used with Elasticsearch, as their close integration allows for powerful log processing.

What is Logstash Used For?

Logstash makes up an essential part of the ELK Stack as it provides the easiest solution for collecting, parsing, and storing logs for analysis & monitoring.

Logstash's range of over 200 open-source plugins helps you index your data across multiple log types, including error, event & web server files, from hundreds of popular integrations. The tool offers an array of input, output & filter plugins for enriching and transforming data from a variety of sources including Golang, Google Cloud & Azure.
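To illustrate how input, filter and output plugins fit together, here is a minimal pipeline sketch. The log file path is a hypothetical example, and the choice of the file input, grok filter and stdout output is an assumption made for illustration rather than a recommended setup.

```logstash
input {
  # Read lines from a web server access log (hypothetical path).
  file {
    path => "/var/log/nginx/access.log"
    start_position => "beginning"
  }
}

filter {
  # Parse each line into structured fields using the built-in
  # combined access log pattern.
  grok {
    match => { "message" => "%{COMBINEDAPACHELOG}" }
  }
}

output {
  # Print the parsed events to the console for inspection.
  stdout { codec => rubydebug }
}
```

In a real deployment the stdout output would typically be swapped for one of the outputs described earlier on this page.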

Who can benefit from using Logstash and how can they get started with it?

Logstash offers benefits to a diverse range of users seeking to centralize, transform, and store data from multiple sources. Both beginners and experienced individuals in data processing can benefit from Logstash. Beginners can leverage tutorial videos to easily set up and start using Logstash. Those looking to enhance their skills can explore becoming an Elastic Certified Engineer, opening up more opportunities for proficiency in Elasticsearch. Essentially, Logstash provides a simplified approach to working with data processing pipelines, making it valuable and accessible to anyone working in this domain.

How does Logstash provide management and orchestration capabilities for deployments?

Logstash provides robust management and orchestration capabilities for deployments through its Pipeline Management UI. Users can easily take control of their Logstash pipelines, making the process of orchestrating and managing pipelines straightforward. The interface seamlessly integrates with built-in security features to safeguard against unintended changes, ensuring that deployments remain secure and controlled throughout their lifecycle.

How can users monitor and gain visibility into Logstash deployments?

Users can monitor and gain visibility into Logstash deployments by leveraging the monitoring and pipeline viewer features available. These features provide users with the ability to easily observe and study an active Logstash node or full deployment. Monitoring the pipelines is crucial as they are often multipurpose and can become sophisticated, requiring a deep understanding of performance, availability, and potential bottlenecks. By utilizing these tools, users can effectively monitor their Logstash environment, identify performance issues, track availability, and pinpoint any bottlenecks that may impact the overall system efficiency. This visibility enables users to proactively manage and optimize their Logstash deployments for optimal performance and reliability.

How does Logstash ensure durability and security of data pipelines?

Logstash ensures durability and security of data pipelines through several key features. Firstly, it provides at-least-once delivery for in-flight events by utilizing a persistent queue. This means that even if a processing error occurs or a failure happens, the events can be replayed from the queue, ensuring data integrity. In case an event cannot be successfully processed, Logstash allows for shunting it to a dead letter queue for investigation and potential replay, thus providing a fail-safe mechanism.

Moreover, Logstash eliminates the need for an external queueing layer to handle ingestion spikes efficiently, as it is designed to scale seamlessly. This not only simplifies the architecture but also improves performance during high-demand scenarios. Additionally, Logstash offers features to fully secure the ingest pipelines, ensuring that data remains protected during processing. By enabling users to implement robust security mechanisms, such as encryption and access control, Logstash helps safeguard the integrity and confidentiality of the data flowing through the pipelines.
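As a rough sketch of the dead letter queue replay described above, the pipeline below reads events back from the dead letter queue using the dead_letter_queue input plugin and prints them for investigation. It assumes the dead letter queue has already been enabled in logstash.yml (dead_letter_queue.enable: true), that the failed events came from a pipeline named main, and that the path shown (a placeholder) matches your Logstash data directory.

```logstash
input {
  dead_letter_queue {
    # Placeholder path: point this at the dead_letter_queue
    # directory under your Logstash data path.
    path => "/path/to/data/dead_letter_queue"
    # Remember which events have been read so a restart does
    # not replay them again.
    commit_offsets => true
    # Name of the pipeline whose failed events should be read (assumed).
    pipeline_id => "main"
  }
}

output {
  # Print the failed events, including the failure reason held
  # in @metadata, so they can be inspected before any replay.
  stdout {
    codec => rubydebug { metadata => true }
  }
}
```

Once the cause of the failure is understood, the stdout output could be replaced with a corrected output to reprocess the events.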

How extensible is Logstash in terms of creating and configuring pipelines?

Logstash offers a highly extensible framework with a wide selection of over 200 plugins, enabling users to create customized pipelines tailored to their specific needs. The pluggable nature allows for seamless integration of various inputs, filters, and outputs to build cohesive pipelines that match unique requirements. Additionally, if a user needs custom functionality that is not provided by the existing plugins, Logstash provides a straightforward process for building new plugins. With a well-documented API for plugin development and a user-friendly plugin generator tool, users can easily create, share, and extend the capabilities of Logstash to match their pipeline requirements effectively.

What Are The Advantages Of Using Logstash?

Some of the advantages of using this pipeline include high availability & flexibility, due to the wide adoption of ELK & the large community supporting their regularly maintained plugins. Some of the most popular plugins include Logtrailing (for tailing live events), Beats (including Metricbeat & Heartbeat), & TCP.

Logstash also benefits from a straightforward configuration format that reduces the complexity of getting started with ELK. Data ingested using Logstash is enriched before being indexed by Elasticsearch, making it readily available for filtering, analysis & reporting in Kibana once it reaches your ELK Stack.