Nginx

Ship your Nginx Metrics via Telegraf to your Logit.io Stack

Nginx is a powerful and widely-used web server and reverse proxy solution, critical for managing high-performance, scalable web applications.

This guide walks you through the process of integrating Filebeat and Telegraf with Nginx to collect and ship both logs and metrics to your Logit.io stack.

Logs

Configure Filebeat to send NGINX logs to Logstash or Elasticsearch.

Install Integration

Install Filebeat

To get started, you will need to install Filebeat. You have two main options:

- Download the Filebeat ZIP file (Windows ZIP x86_64), or

- Download the Filebeat Microsoft Software Installer (MSI) file (Windows MSI x86_64 (beta))

To successfully install filebeat and set up the required Windows service you will need to have administrator access.

If you have chosen to download the zip file:

- Extract the contents of the zip file into C:\Program Files.

- Rename the extracted folder to filebeat

- Open a PowerShell prompt as an Administrator (right-click the PowerShell icon and select Run As Administrator).

- From the PowerShell prompt, run the following commands to install filebeat as a Windows service:

cd 'C:\Program Files\filebeat'
.\install-service-filebeat.ps1

If script execution is disabled on your system, you need to set the execution policy for the current session to allow the script to run. For example:

PowerShell.exe -ExecutionPolicy UnRestricted -File .\install-service-filebeat.ps1

For more information about PowerShell execution policies, see the PowerShell documentation.

If you have chosen to download the filebeat.msi file:

- Double-click the file and the relevant files will be downloaded.

At the end of the installation process you'll be given the option to open the folder where filebeat has been installed.

- Open a PowerShell prompt as an Administrator (right-click the PowerShell icon and select Run As Administrator).

- From the PowerShell prompt, change directory to the location where filebeat was installed and run the following command to install filebeat as a Windows service:

.\install-service-filebeat.ps1

If script execution is disabled on your system, you need to set the execution policy for the current session to allow the script to run. For example:

PowerShell.exe -ExecutionPolicy UnRestricted -File .\install-service-filebeat.ps1

For more information about PowerShell execution policies, see the PowerShell documentation.

The default configuration file is located at:

C:\Program Files\filebeat\filebeat.yml

Enable the NGINX module

You will need to enable the nginx module. From the Filebeat installation directory, run:

.\filebeat.exe modules list
.\filebeat.exe modules enable nginx

You will see a folder called modules.d. Open this folder and you will see a list of YAML files.

These YAML files are the module configuration files, and they are all disabled by default.

Open the nginx.yml file with your preferred text editor, replace its contents with the snippet below, and then save:

# Module: nginx
# Docs: https://www.elastic.co/guide/en/beats/filebeat/8.17/filebeat-module-nginx.html

- module: nginx
  # Access logs
  access:
    enabled: true

    # Set var.paths for the log files. If left empty,
    # Filebeat will choose the paths depending on your OS.
    var.paths:
      - 'C:\nginx\logs\access.log'

  # Error logs
  error:
    enabled: true

    # Set var.paths for the error log files. If left empty,
    # Filebeat will choose the paths depending on your OS.
    var.paths:
      - 'C:\nginx\logs\error.log'

  # Ingress-nginx controller logs. This is disabled by default. It could be used in Kubernetes environments to parse ingress-nginx logs
  ingress_controller:
    enabled: false

    # Set var.paths for the log files. If left empty,
    # Filebeat will choose the paths depending on your OS.
    #var.paths:

Note: The snippet above assumes that Nginx is located at C:\nginx\. If Nginx is located elsewhere, you will need to update the file accordingly.

Update your configuration file

The configuration file below is pre-configured to send data to your Logit.io Stack via Logstash.

Copy the configuration file below and overwrite the contents of filebeat.yml.

# ============================== Filebeat modules ==============================
filebeat.config.modules:
  path: ${path.config}/modules.d/*.yml
  reload.enabled: false
  #reload.period: 10s

# ================================== Outputs ===================================
# ------------------------------ Logstash Output -------------------------------
output.logstash:
  hosts: ["@logstash.host:@logstash.sslPort"]
  loadbalance: true
  ssl.enabled: true

# ================================= Processors =================================
processors:
  - add_host_metadata:
      when.not.contains.tags: forwarded
  - add_cloud_metadata: ~
  - add_docker_metadata: ~
  - add_kubernetes_metadata: ~

If you're running Filebeat 7, add this code block to the end. Otherwise, you can leave it out.

# ... For Filebeat 7 only ...
filebeat.registry.path: /var/lib/filebeat

If you're running Filebeat 6, add this code block to the end. Otherwise, you can leave it out.

# ... For Filebeat 6 only ...
registry_file: /var/lib/filebeat/registry

Validate your YAML

It's a good idea to run the configuration file through a YAML validator to rule out indentation errors, clean up extra characters, and check if your YAML file is valid. Yamllint.com is a great choice.
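One indentation error that can be caught locally before using a full validator is a tab character in the leading whitespace, which YAML does not allow. The sketch below is a minimal pre-check under that single assumption; the function name `find_tab_indentation` is hypothetical, and it does not replace a real YAML validator or Filebeat's own configuration test.

```python
def find_tab_indentation(yaml_text: str) -> list[int]:
    """Return 1-based line numbers whose leading whitespace contains a tab.

    YAML forbids tabs for indentation, so every reported line should be
    re-indented with spaces before the file is given to Filebeat.
    """
    bad_lines = []
    for number, line in enumerate(yaml_text.splitlines(), start=1):
        # Leading whitespace is everything before the first character
        # that is neither a space nor a tab.
        indent = line[: len(line) - len(line.lstrip(" \t"))]
        if "\t" in indent:
            bad_lines.append(number)
    return bad_lines


if __name__ == "__main__":
    # Illustrative input only: the third line is indented with a tab.
    sample = "output.logstash:\n  loadbalance: true\n\tssl.enabled: true\n"
    print(find_tab_indentation(sample))
```

To check a real file, the contents of filebeat.yml could be read with `open(...).read()` and passed to the function; an empty list means no tab-indented lines were found.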

Validate configuration

.\filebeat.exe test config -c filebeat.yml

If the yml file is invalid, Filebeat will print a description of the error. For example, if the output.logstash section was missing, Filebeat would print: no outputs are defined, please define one under the output section

Start Filebeat

To start Filebeat, run in PowerShell:

Start-Service filebeat

Launch OpenSearch Dashboards to View Your Data

Launch OpenSearch Dashboards

How to diagnose no data in Stack

If you don't see data appearing in your stack after following this integration, take a look at the troubleshooting guide for steps to diagnose and resolve the problem or contact our support team and we'll be happy to assist.

Metrics

Configure Telegraf to ship Nginx metrics to your Logit.io metrics stack.

Install Integration

Configure Nginx

Update the Nginx configuration file (nginx.conf) to enable the stub_status module.

The location of nginx.conf depends on your operating system and how Nginx was installed. A common location is:

<Nginx installation directory>\conf\nginx.conf

Open the nginx.conf file with your preferred text editor and find the server section. Replace this section with the code snippet below and then save:

server {
    listen 127.0.0.1:80;
    server_name 127.0.0.1;

    location /server_status {
        stub_status on;
        allow <Your localhost address>;
        deny all;
    }
}

Replace <Your localhost address> with your localhost address (for example, 127.0.0.1) and restart Nginx.
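Once Nginx has restarted, the /server_status location returns a short plain-text page with connection counters. The sketch below shows one way that page could be parsed; it is an illustration rather than the way Telegraf itself reads the page. The function name `parse_stub_status` and the dictionary keys are chosen for this example, and the sample text follows the layout shown in the Nginx stub_status documentation.

```python
import re


def parse_stub_status(body: str) -> dict[str, int]:
    """Parse the plain-text page served by Nginx's stub_status module."""
    active = re.search(r"Active connections:\s+(\d+)", body)
    # The three counters sit on the line after the "server accepts ..." header.
    counters = re.search(
        r"server accepts handled requests\s+(\d+)\s+(\d+)\s+(\d+)", body
    )
    states = re.search(
        r"Reading:\s*(\d+)\s+Writing:\s*(\d+)\s+Waiting:\s*(\d+)", body
    )
    if not (active and counters and states):
        raise ValueError("response does not look like stub_status output")
    return {
        "active": int(active.group(1)),
        "accepts": int(counters.group(1)),
        "handled": int(counters.group(2)),
        "requests": int(counters.group(3)),
        "reading": int(states.group(1)),
        "writing": int(states.group(2)),
        "waiting": int(states.group(3)),
    }


if __name__ == "__main__":
    # Sample page in the stub_status layout; the numbers are illustrative.
    sample = (
        "Active connections: 291 \n"
        "server accepts handled requests\n"
        " 16630948 16630948 31070465 \n"
        "Reading: 6 Writing: 179 Waiting: 106 \n"
    )
    print(parse_stub_status(sample))
```

To try it against a running server, the page body could be fetched from http://localhost/server_status with `urllib.request.urlopen` and decoded before being passed to the function. A `ValueError` suggests the location is not serving stub_status output, for example because the request was denied.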

Install Telegraf

This integration allows you to configure a Telegraf agent to send your metrics to Logit.io.

When you paste the commands below into PowerShell, the first will download the Telegraf zip file.

Once that is complete, press Enter again and the zip file will be extracted into C:\Program Files\InfluxData\telegraf\telegraf-1.34.1.

wget https://dl.influxdata.com/telegraf/releases/telegraf-1.34.1_windows_amd64.zip -UseBasicParsing -OutFile telegraf-1.34.1_windows_amd64.zip
Expand-Archive .\telegraf-1.34.1_windows_amd64.zip -DestinationPath 'C:\Program Files\InfluxData\telegraf'

Or, in PowerShell 7, use:

# Download the Telegraf ZIP file
Invoke-WebRequest -Uri "https://dl.influxdata.com/telegraf/releases/telegraf-1.34.1_windows_amd64.zip" `
  -OutFile "telegraf-1.34.1_windows_amd64.zip" `
  -UseBasicParsing

# Extract the contents of the ZIP file
Expand-Archive -Path ".\telegraf-1.34.1_windows_amd64.zip" `
  -DestinationPath "C:\Program Files\InfluxData\telegraf"

The default configuration file is located at:

C:\Program Files\InfluxData\telegraf\telegraf.conf

Configure Telegraf

The configuration below is pre-configured to scrape Nginx and system metrics from your host. Add the following code to the telegraf.conf configuration file from the previous step.

### Read Nginx's basic status information (ngx_http_stub_status_module)
[[inputs.nginx]]
  ## An array of Nginx stub_status URI to gather stats.
  urls = ["http://localhost/server_status"]

  ## Optional TLS Config
  # tls_ca = "/etc/telegraf/ca.pem"
  # tls_cert = "/etc/telegraf/cert.pem"
  # tls_key = "/etc/telegraf/key.pem"

  ## Use TLS but skip chain & host verification
  # insecure_skip_verify = false

  ## HTTP response timeout (default: 5s)
  response_timeout = "5s"

[[inputs.tail]]
  name_override = "nginxlog"
  ## Path to the Nginx access log; adjust it to match your installation.
  files = ["/var/log/nginx/access.log"]
  from_beginning = true
  pipe = false
  data_format = "grok"
  grok_patterns = ["%{COMBINED_LOG_FORMAT}"]

### System metrics
[[inputs.disk]]
[[inputs.net]]
[[inputs.mem]]
[[inputs.system]]
[[inputs.cpu]]
  percpu = false
  totalcpu = true
  collect_cpu_time = true
  report_active = true

### Output
[[outputs.http]]
  url = "https://@metricsUsername:@metricsPassword@@metrics_id-vm.logit.io:@vmAgentPort/api/v1/write"
  data_format = "prometheusremotewrite"

  [outputs.http.headers]
    Content-Type = "application/x-protobuf"
    Content-Encoding = "snappy"

Start Telegraf

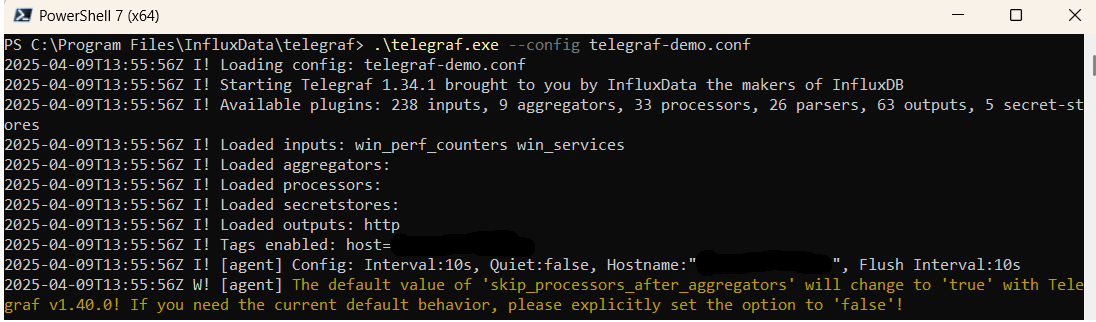

From the location where Telegraf was installed (C:\Program Files\InfluxData\telegraf\telegraf-1.34.1) run the program

providing the chosen configuration file as a parameter:

.\telegraf.exe --config telegraf.confOnce Telegraf is running you should see output similar to the following, which confirms the inputs, output and basic configuration the application has been started with:

Launch Grafana to View Your Data

Launch Grafana

How to diagnose no data in Stack

If you don't see data appearing in your stack after following this integration, take a look at the troubleshooting guide for steps to diagnose and resolve the problem or contact our support team and we'll be happy to assist.
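One thing that may be worth checking when the nginxlog measurement stays empty is whether your access-log lines follow Nginx's predefined combined format, since the [[inputs.tail]] block in telegraf.conf parses them with the COMBINED_LOG_FORMAT grok pattern. The sketch below is a simplified stand-in written as a regular expression, not Telegraf's grok pattern itself. The function name `parse_access_line` is hypothetical, and the field names follow the Nginx variable names rather than whatever Telegraf emits.

```python
import re
from typing import Optional

# Nginx "combined" format:
# $remote_addr - $remote_user [$time_local] "$request" $status
# $body_bytes_sent "$http_referer" "$http_user_agent"
COMBINED = re.compile(
    r'(?P<remote_addr>\S+) \S+ (?P<remote_user>\S+) '
    r'\[(?P<time_local>[^\]]+)\] '
    r'"(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) (?P<body_bytes_sent>\d+|-) '
    r'"(?P<http_referer>[^"]*)" "(?P<http_user_agent>[^"]*)"'
)


def parse_access_line(line: str) -> Optional[dict[str, str]]:
    """Return the fields of one combined-format line, or None if it does not match."""
    match = COMBINED.match(line)
    return match.groupdict() if match else None


if __name__ == "__main__":
    # Illustrative log line; not taken from a real server.
    line = (
        '127.0.0.1 - - [10/Oct/2024:13:55:36 +0000] '
        '"GET /index.html HTTP/1.1" 200 612 "-" "curl/8.5.0"'
    )
    print(parse_access_line(line))
```

If lines from your own access.log return `None` here, the log is probably written in a custom `log_format`, and the grok pattern in telegraf.conf would need to be adjusted to match it.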

Telegraf Nginx metrics Overview

To monitor and analyze Nginx metrics effectively in a distributed environment, organizations need a reliable and efficient metrics management solution. Telegraf is an open-source metrics collection agent that can gather metrics from various sources, including Nginx, operating systems, databases, and other applications.

With a wide range of input plugins, Telegraf allows users to collect metrics from different sources, such as CPU usage, memory usage, network activity, and more. To store and query the collected metrics, organizations can use Prometheus, an open-source monitoring and alerting tool that supports a flexible querying language and graphical visualization of data.

By configuring Telegraf to output metrics in the Prometheus format and using Prometheus to scrape the metrics from the Telegraf server, organizations can ship Nginx metrics from Telegraf to Prometheus. This process involves setting up Telegraf to collect Nginx metrics, outputting them in the Prometheus format, configuring Prometheus to scrape the metrics from the Telegraf server, and visualizing the data using Prometheus' flexible querying and graphical visualization capabilities.

Using Telegraf to ship Nginx metrics to Prometheus is a reliable and efficient metrics management solution for distributed environments. It allows organizations to gain insights into Nginx performance, optimize their Nginx deployments, and troubleshoot any issues that arise.

If you need any further assistance with shipping your data to Logit.io, we're here to help you get started. Feel free to contact our support team by sending us a message via live chat and we'll be happy to assist.