PostgreSQL

Collect and ship PostgreSQL logs to Logstash and Elasticsearch

Follow the steps below to send your observability data to Logit.io.

Logs

Filebeat is a lightweight shipper that enables you to send your PostgreSQL application logs to Logstash and Elasticsearch. Configure Filebeat using the pre-defined examples below to start sending and analysing your PostgreSQL application logs.

Install Integration

Install Filebeat

To get started, install Filebeat. You have two main options:

- Choose the Filebeat ZIP file (Windows ZIP x86_64), or

- Choose the Microsoft Software Installer (MSI) file (Windows MSI x86_64 (beta)).

You need administrator access to install Filebeat and set up the required Windows service.

If you downloaded the ZIP file:

- Extract the contents of the ZIP file into C:\Program Files.

- Rename the extracted folder to filebeat.

- Open a PowerShell prompt as an Administrator (right-click the PowerShell icon and select Run As Administrator).

- From the PowerShell prompt, run the following commands to install Filebeat as a Windows service:

cd 'C:\Program Files\filebeat'
.\install-service-filebeat.ps1

If script execution is disabled on your system, you need to set the execution policy for the current session to allow the script to run. For example:

PowerShell.exe -ExecutionPolicy UnRestricted -File .\install-service-filebeat.ps1

For more information, see the Microsoft documentation on PowerShell execution policies.

If you downloaded the filebeat.msi file:

- Double-click it to run the installer, which installs the relevant files.

At the end of the installation process, you'll be given the option to open the folder where Filebeat has been installed.

- Open a PowerShell prompt as an Administrator (right-click the PowerShell icon and select Run As Administrator).

- From the PowerShell prompt, change to the directory where Filebeat was installed and run the following command to install Filebeat as a Windows service:

.\install-service-filebeat.ps1

If script execution is disabled on your system, you need to set the execution policy for the current session to allow the script to run. For example:

PowerShell.exe -ExecutionPolicy UnRestricted -File .\install-service-filebeat.ps1

For more information, see the Microsoft documentation on PowerShell execution policies.

The default configuration file is located at:

C:\Program Files\filebeat\filebeat.yml

Enable the PostgreSQL module

Filebeat has several built-in modules. List them, then enable the postgresql module:

.\filebeat.exe modules list
.\filebeat.exe modules enable postgresql

In the module config under modules.d, change the module settings to match your environment. You must enable at least one fileset in the module.

Filesets are disabled by default.
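Filebeat keeps each module's settings in modules.d, and `modules enable` works by renaming the file from postgresql.yml.disabled to postgresql.yml. The Python sketch below reports which modules are enabled by looking at those file names, which can help confirm the step above worked. The helper name `module_states` and the `MODULES_DIR` path (the ZIP install location) are assumptions for illustration, and the check only covers the module file itself: the filesets inside it still need `enabled: true`.

```python
from pathlib import Path

# Assumed ZIP-install location from the steps above; adjust if you used the MSI.
MODULES_DIR = Path(r"C:\Program Files\filebeat\modules.d")

DISABLED_SUFFIX = ".yml.disabled"


def module_states(modules_dir=MODULES_DIR):
    """Return {module_name: enabled?} based on the file names in modules.d."""
    states = {}
    for path in Path(modules_dir).iterdir():
        name = path.name
        if name.endswith(DISABLED_SUFFIX):
            # e.g. mysql.yml.disabled -> module present but not enabled
            states[name[: -len(DISABLED_SUFFIX)]] = False
        elif name.endswith(".yml"):
            # e.g. postgresql.yml -> module enabled
            states[name[: -len(".yml")]] = True
    return states
```

After running `.\filebeat.exe modules enable postgresql`, `module_states()["postgresql"]` should be `True`.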

Copy the snippet below and replace the contents of the postgresql.yml module file:

# Module: postgresql
# Docs: https://www.elastic.co/guide/en/beats/filebeat/8.12/filebeat-module-postgresql.html

- module: postgresql
  # All logs
  log:
    enabled: true

    # Set custom paths for the log files. If left empty,
    # Filebeat will choose the paths depending on your OS.
    #var.paths:

Update Your Configuration File

The configuration file below is pre-configured to send data to your Logit.io Stack via Logstash.

Copy the configuration file below and overwrite the contents of filebeat.yml.

# ============================== Filebeat modules ==============================

filebeat.config.modules:
  path: ${path.config}/modules.d/*.yml
  reload.enabled: false
  #reload.period: 10s

# ================================== Outputs ===================================

# ------------------------------ Logstash Output -------------------------------
output.logstash:
  hosts: ["@logstash.host:@logstash.sslPort"]
  loadbalance: true
  ssl.enabled: true

# ================================= Processors =================================
processors:
  - add_host_metadata:
      when.not.contains.tags: forwarded
  - add_cloud_metadata: ~
  - add_docker_metadata: ~
  - add_kubernetes_metadata: ~

If you're running Filebeat 7, add this code block to the end. Otherwise, you can leave it out.

# ... For Filebeat 7 only ...
filebeat.registry.path: /var/lib/filebeat

If you're running Filebeat 6, add this code block to the end. Otherwise, you can leave it out.

# ... For Filebeat 6 only ...
registry_file: /var/lib/filebeat/registry

Validate your YAML

It's a good idea to run the configuration file through a YAML validator to rule out indentation errors, clean up extra characters, and check whether your YAML file is valid. Yamllint.com is a great choice.
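To catch the most common copy-paste problems locally before using a validator, the Python sketch below flags tab characters in indentation (YAML does not allow tabs for indentation), non-breaking spaces picked up from web pages, and trailing whitespace. `yaml_precheck` is a hypothetical helper, not a YAML parser: it cannot detect structural errors, so a full validator is still worth running.

```python
NBSP = "\u00a0"  # non-breaking space, often introduced by copying from a browser


def yaml_precheck(text):
    """Return (line_number, problem) pairs for common copy-paste issues."""
    problems = []
    for number, line in enumerate(text.splitlines(), start=1):
        # The indent is the leading run of spaces, tabs and non-breaking spaces.
        indent = line[: len(line) - len(line.lstrip(" \t" + NBSP))]
        if "\t" in indent:
            problems.append((number, "tab used for indentation"))
        if NBSP in line:
            problems.append((number, "non-breaking space"))
        if line != line.rstrip():
            problems.append((number, "trailing whitespace"))
    return problems
```

For example, `yaml_precheck(Path("filebeat.yml").read_text(encoding="utf-8"))` (with `from pathlib import Path`) returns an empty list when none of these issues are present.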

Validate configuration

.\filebeat.exe test config -c filebeat.yml

If the YAML file is invalid, Filebeat prints a description of the error. For example, if the output.logstash section is missing, Filebeat prints: no outputs are defined, please define one under the output section
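To script this check (for example, before restarting the service), a minimal Python sketch can run `filebeat.exe test config` and then `filebeat.exe test output`, which asks Filebeat to try connecting to the configured Logstash output. The helper names and install path are assumptions; the `runner` parameter exists only so the logic can be exercised without Filebeat installed.

```python
import subprocess
from pathlib import Path

# Assumed ZIP-install location from the steps above.
FILEBEAT_DIR = Path(r"C:\Program Files\filebeat")


def check_commands(install_dir=FILEBEAT_DIR, config="filebeat.yml"):
    """Build the argument lists for `test config` and `test output`."""
    exe = str(Path(install_dir) / "filebeat.exe")
    return [
        [exe, "test", "config", "-c", config],
        [exe, "test", "output", "-c", config],
    ]


def run_checks(install_dir=FILEBEAT_DIR, config="filebeat.yml", runner=subprocess.run):
    """Run each check in order, stopping at the first failure.

    Returns (ok, output) where output holds the combined stdout/stderr.
    """
    output = []
    for cmd in check_commands(install_dir, config):
        result = runner(cmd, cwd=install_dir, capture_output=True, text=True)
        output.append(result.stdout + result.stderr)
        if result.returncode != 0:
            return False, "".join(output)
    return True, "".join(output)
```

Stopping at the first failure avoids testing the Logstash connection when the configuration itself doesn't load.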

Start Filebeat

To start Filebeat, run the following in PowerShell:

Start-Service filebeat

Launch OpenSearch Dashboards to View Your Data

How to diagnose no data in Stack

If you don't see data appearing in your stack after following this integration, take a look at the troubleshooting guide for steps to diagnose and resolve the problem or contact our support team and we'll be happy to assist.

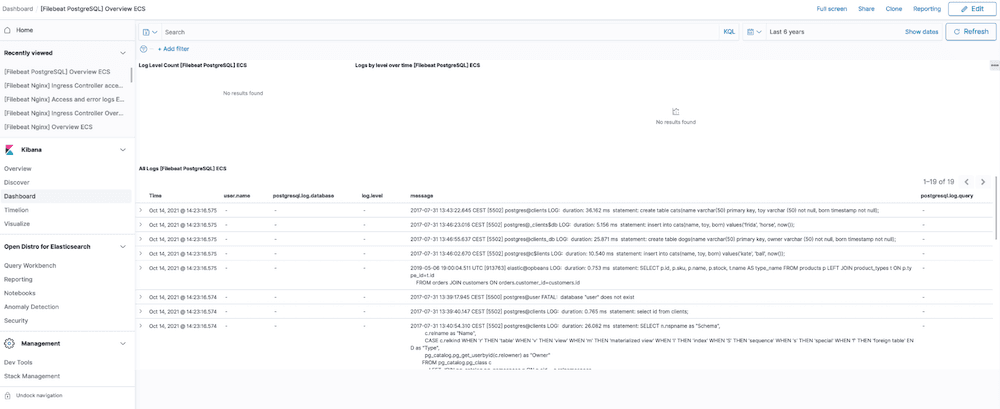

PostgreSQL Dashboard

The PostgreSQL module comes with predefined Kibana dashboards. To view your dashboards for any of your Logit.io stacks, launch Logs and choose Dashboards.

Metrics

Configure Telegraf to ship PostgreSQL metrics to your Logit.io stacks.

Install Integration

Install Telegraf

This integration allows you to configure a Telegraf agent to send your metrics to Logit.io.

Choose the installation method for your operating system:

When you paste the commands below into PowerShell, the first command downloads the Telegraf ZIP file.

Once the download is complete, press Enter again and the ZIP file will be extracted into C:\Program Files\InfluxData\telegraf\telegraf-1.34.1.

wget https://dl.influxdata.com/telegraf/releases/telegraf-1.34.1_windows_amd64.zip -UseBasicParsing -OutFile telegraf-1.34.1_windows_amd64.zip

Expand-Archive .\telegraf-1.34.1_windows_amd64.zip -DestinationPath 'C:\Program Files\InfluxData\telegraf'

Or, in PowerShell 7, use:

# Download the Telegraf ZIP file
Invoke-WebRequest -Uri "https://dl.influxdata.com/telegraf/releases/telegraf-1.34.1_windows_amd64.zip" `
  -OutFile "telegraf-1.34.1_windows_amd64.zip" `
  -UseBasicParsing

# Extract the contents of the ZIP file
Expand-Archive -Path ".\telegraf-1.34.1_windows_amd64.zip" `
  -DestinationPath "C:\Program Files\InfluxData\telegraf"

The default configuration file is located at:

C:\Program Files\InfluxData\telegraf\telegraf-1.34.1\telegraf.conf
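If PowerShell isn't convenient, the same download-and-extract step can be sketched in Python using only the standard library. The version number, download URL and destination folder are taken from the commands above; the function names are hypothetical. Writing to C:\Program Files still requires an elevated prompt.

```python
import urllib.request
import zipfile
from pathlib import Path

TELEGRAF_VERSION = "1.34.1"
DEST_DIR = Path(r"C:\Program Files\InfluxData\telegraf")


def telegraf_zip_name(version=TELEGRAF_VERSION):
    return f"telegraf-{version}_windows_amd64.zip"


def telegraf_zip_url(version=TELEGRAF_VERSION):
    return f"https://dl.influxdata.com/telegraf/releases/{telegraf_zip_name(version)}"


def extract(archive, dest=DEST_DIR):
    """Extract the ZIP into dest; per the steps above it unpacks a telegraf-<version> folder."""
    with zipfile.ZipFile(archive) as zf:
        zf.extractall(dest)


def download_and_extract(version=TELEGRAF_VERSION, dest=DEST_DIR):
    """Download the Windows ZIP into the current folder, then extract it."""
    archive = Path(telegraf_zip_name(version))
    urllib.request.urlretrieve(telegraf_zip_url(version), archive)
    extract(archive, dest)
    return Path(dest) / f"telegraf-{version}"
```

Calling `download_and_extract()` from an elevated prompt returns the folder that Telegraf is later started from.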

Configure the Telegraf input plugin

The configuration below is pre-configured to collect PostgreSQL metrics as well as system metrics from your host. Add it to the telegraf.conf file from the previous step.

# Read metrics from one or many postgresql servers
[[inputs.postgresql]]
  ## Specify address via a url matching:
  ##   postgres://[pqgotest[:password]]@localhost[/dbname]?sslmode=[disable|verify-ca|verify-full]&statement_timeout=...
  ## or a simple string:
  ##   host=localhost user=pqgotest password=... sslmode=... dbname=app_production
  ##
  ## Users can pass the path to the socket as the host value to use a socket
  ## connection (e.g. `/var/run/postgresql`).
  ##
  ## All connection parameters are optional.
  ##
  ## Without the dbname parameter, the driver will default to a database
  ## with the same name as the user. This dbname is just for instantiating a
  ## connection with the server and doesn't restrict the databases we are trying
  ## to grab metrics for.
  ##
  address = "host=localhost user=postgres sslmode=disable"

  ## A custom name for the database that will be used as the "server" tag in the
  ## measurement output. If not specified, a default one generated from
  ## the connection address is used.
  # outputaddress = "db01"

  ## connection configuration.
  ## maxlifetime - specify the maximum lifetime of a connection.
  ## default is forever (0s)
  ##
  ## Note that this does not interrupt queries, the lifetime will not be enforced
  ## whilst a query is running
  # max_lifetime = "0s"

  ## A list of databases to explicitly ignore. If not specified, metrics for all
  ## databases are gathered. Do NOT use with the 'databases' option.
  # ignored_databases = ["postgres", "template0", "template1"]

  ## A list of databases to pull metrics about. If not specified, metrics for all
  ## databases are gathered. Do NOT use with the 'ignored_databases' option.
  # databases = ["app_production", "testing"]

  ## Whether to use prepared statements when connecting to the database.
  ## This should be set to false when connecting through a PgBouncer instance
  ## with pool_mode set to transaction.
  prepared_statements = true

### System metrics
[[inputs.disk]]
[[inputs.net]]
[[inputs.mem]]
[[inputs.system]]
[[inputs.cpu]]
  percpu = false
  totalcpu = true
  collect_cpu_time = true
  report_active = true

### Output
[[outputs.http]]
  url = "https://@metricsUsername:@metricsPassword@@metrics_id-vm.logit.io:@vmAgentPort/api/v1/write"
  data_format = "prometheusremotewrite"

  [outputs.http.headers]
    Content-Type = "application/x-protobuf"
    Content-Encoding = "snappy"

Read more about how to configure data scraping and configuration options for PostgreSQL.
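Two values in the configuration above are easy to break when they contain special characters. The `address` string uses PostgreSQL's keyword/value connection-string format, where a value that is empty or contains spaces, single quotes or backslashes must be single-quoted, with `'` and `\` escaped by a backslash. The credentials embedded in the remote-write `url` must be percent-encoded if they contain characters such as `@`, `:` or `/`. The Python helpers below are a hypothetical sketch of both; we assume Telegraf's PostgreSQL driver honours the libpq quoting rules, and the host name in the example is a placeholder for your stack's values.

```python
from urllib.parse import quote


def pg_keyword_value(**params):
    """Build a libpq-style keyword/value connection string.

    Values that are empty or contain whitespace, a single quote or a backslash
    are wrapped in single quotes, with ' and \\ escaped by a backslash.
    """
    parts = []
    for key, value in params.items():
        value = str(value)
        if value == "" or any(ch.isspace() or ch in "'\\" for ch in value):
            value = "'" + value.replace("\\", "\\\\").replace("'", "\\'") + "'"
        parts.append(f"{key}={value}")
    return " ".join(parts)


def remote_write_url(username, password, host, port, path="/api/v1/write"):
    """Build the outputs.http url with percent-encoded credentials."""
    user = quote(username, safe="")
    secret = quote(password, safe="")
    return f"https://{user}:{secret}@{host}:{port}{path}"
```

For example, `pg_keyword_value(host="localhost", user="postgres", sslmode="disable")` reproduces the `address` value shown in the configuration, while a password such as `it's a secret` comes out quoted and escaped.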

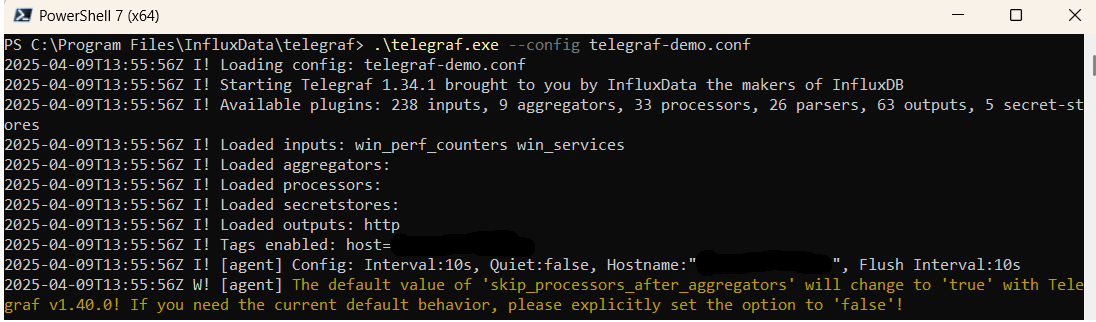

Start Telegraf

From the folder where Telegraf was installed (C:\Program Files\InfluxData\telegraf\telegraf-1.34.1), run the program and pass the configuration file as a parameter:

.\telegraf.exe --config telegraf.conf

Once Telegraf is running, its startup output confirms the inputs, output and basic configuration it has been started with.

Launch Grafana to View Your Data

How to diagnose no data in Stack

If you don't see data appearing in your stack after following this integration, take a look at the troubleshooting guide for steps to diagnose and resolve the problem or contact our support team and we'll be happy to assist.

PostgreSQL Logging Overview

PostgreSQL (often shortened to Postgres) is a highly stable open-source relational database that supports both relational and non-relational querying. Postgres runs on most operating systems, including Linux, Windows and macOS.

PostgreSQL is used by some of the world's best-known brands, including Apple, IMDB, Red Hat and Cisco, thanks to its robust feature set, useful add-ons and scalability.

Its benefits include support for most programming languages and its strength as a reliable transactional database for companies of all sizes.

PostgreSQL users are encouraged to log as much as possible: with insufficient logging configuration, you could easily lose key messages needed for troubleshooting and error resolution. Below are some of the most important logs you'll likely need to analyse when running Postgres.

PostgreSQL transaction logs help you identify which queries were run within a transaction.

Remote host IP/name (with port) logs can help security teams identify suspicious activity. If you need to pinpoint troublesome sessions affecting your infrastructure, Process ID logs can provide further insight.

Postgres logging also offers twenty-three other parameters that can be isolated for troubleshooting using severity keywords such as ERROR, FATAL, WARNING and PANIC.
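As a rough illustration of filtering by severity, the Python sketch below parses lines written with PostgreSQL's default `log_line_prefix` of `'%m [%p] '` (timestamp, then process ID) and counts WARNING, ERROR, FATAL and PANIC entries per severity and per process ID. If your server uses a different prefix, for example one that adds `%r` for the remote host and port, the regular expression must be adjusted; the function names and sample lines are hypothetical.

```python
import re
from collections import Counter

# Matches lines such as:
# 2024-05-01 10:15:32.123 UTC [4242] ERROR:  relation "users" does not exist
LINE_RE = re.compile(
    r"^(?P<timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d{3} \S+) "
    r"\[(?P<pid>\d+)\] "
    r"(?P<severity>[A-Z0-9]+):\s+(?P<message>.*)$"
)

ALERT_SEVERITIES = frozenset({"WARNING", "ERROR", "FATAL", "PANIC"})


def parse_line(line):
    """Return the named fields of a log line, or None if it doesn't match."""
    match = LINE_RE.match(line.rstrip("\r\n"))
    return match.groupdict() if match else None


def summarise(lines, severities=ALERT_SEVERITIES):
    """Count matching entries by severity and by process ID."""
    by_severity = Counter()
    by_pid = Counter()
    for line in lines:
        entry = parse_line(line)
        if entry is None or entry["severity"] not in severities:
            continue  # skip LOG, STATEMENT, DETAIL, ... and unparsed lines
        by_severity[entry["severity"]] += 1
        by_pid[int(entry["pid"])] += 1
    return by_severity, by_pid
```

Because `summarise` accepts any iterable of lines, it can be pointed directly at an open log file to see which server processes are producing errors.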

With all these logs, directories and parameters, it is easy to become overwhelmed by the prospect of thoroughly analysing your log data, so you may wish to use a log management system to streamline the process.

Our built-in PostgreSQL log file analyser helps DBAs, sysadmins and developers identify issues, create visualisations and set alerts when preconfigured or custom conditions are met.

If you need any assistance with analysing your PostgreSQL logs, we're here to help. Reach out to the Logit.io support team via live chat and we'll be happy to help you start analysing your data.