Interview

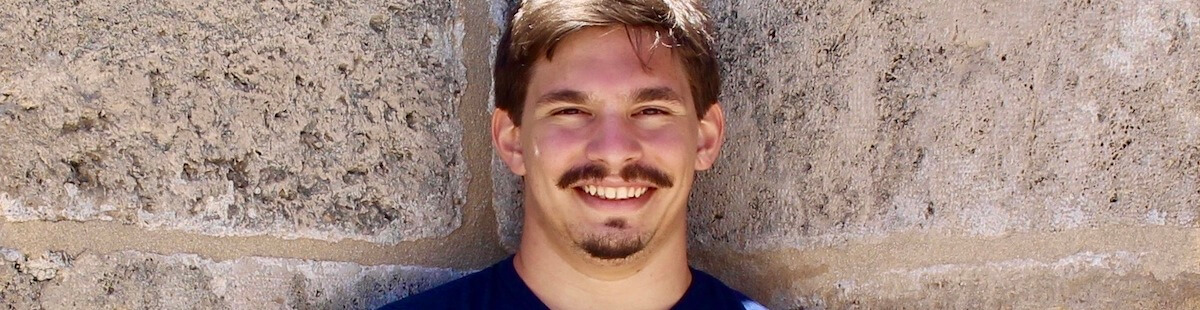

In the latest instalment of our interviews with leaders from across the world of tech, we’ve welcomed Semih Cantürk. Semih is a Machine Learning Engineer at Zetane Systems and an MSc & incoming PhD student at the University of Montréal and MILA Institute.

At Zetane, he’s responsible for the development and integration of explainable AI algorithms in addition to leading various project work. Previously, for his undergraduate thesis, he worked on building a machine learning module that predicts motor tasks based on brain activity from fMRI data (the thesis won the Penn Engineering Societal Impact Award). He also co-authored an article on using machine learning to predict the efficacy of existing antiviral drugs on SARS-CoV-2 (COVID-19).

Tell us about the business you represent. What are its vision & goals?

At Zetane, we’re aiming to make explainable AI mainstream. Deep learning (DL) models are difficult to interpret, which is not usually an issue when your model works well, but is a problem when it doesn’t since you have no idea why it doesn’t work.

Our solutions aim to (a) show what’s happening in your DL model (via our software, the Zetane Engine), and (b) detect situations where your model fails (via our Data testing and analysis SaaS services). This in turn enables building both more powerful and safer DL pipelines for our customers.

Can you share a little bit about yourself and how you got into the field of artificial intelligence?

I’m Semih Cantürk, AI Engineer at Zetane Systems and MSc student at the MILA AI Institute & Université de Montréal. I’m from Istanbul, Turkey, and have been working at Zetane for 2 years now.

When I first started college, I really had no idea what I wanted to do career-wise -- all I knew was that I liked math. I enrolled in systems engineering at the University of Pennsylvania mostly because the department allowed a wide range of courses from various fields. It was sort of a build-your-own thing where they throw a bunch of statistics, optimization & other applied math at you and you’re free to apply that anywhere.

Some of my peers were into electrical engineering, some were into finance, others were into biological/chemical systems. I tried my hand at econ/finance at first, got bored out of my mind, reoriented myself to electrical engineering and didn’t end up liking that too much either.

I hadn’t even considered computer science at first because so many people around me had been coding since high school and I hadn’t written a single line of code until my mandatory Computer Science intro class in freshman year. But then slowly it grew on me and I ended up reorienting (again) to Computer Science. Eventually, in my junior year, I took an intro Machine Learning class that hit the right spot, which was apparently at the intersection of math and computer science.

I took on an fMRI Machine Learning module project as my graduation thesis and did another year of Machine Learning research after graduation, which made up for my lack of Machine Learning experience prior to junior year.

Can you tell us a bit more about how you were able to build a machine learning module that predicts motor tasks based on brain activity from fMRI data?

This was initially an idea proposed to me by Dr Victor Preciado at Penn, who saw that I was interested in pursuing Machine Learning in further depth after taking his class. I needed some practical experience, and this was the perfect opportunity, in fact. One issue was that neither I nor the two other members of my team had any experience in neuroscience, so we teamed up with a PhD student from Dr Preciado’s lab to help us with that side of things.

The project was a success (we ended up winning the departmental Societal Impact Award), and after graduation, my professor offered me a part-time position to pursue this project further.

The idea is in fact relatively straightforward -- the fMRI data consists of signal readings taken at regular intervals from 60K points on the brain’s cortical surface while the patient was performing several tasks. It’s quite in the same vein as the visualizations where certain regions of the brain light up when performing certain activities.

If you can match the points that light up to the tasks performed, then voila! A big part of the challenge, however, was to do this in real time: we were trying to detect each task within 2 seconds of it happening, and preferably instantaneously, since one can detect the signal onset before the task actually takes place. The theoretical use case was that, by integrating such a solution into a brain-computer interface, disabled patients could control their artificial limbs.
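
To make the "match the points that light up to tasks" idea concrete, here is a minimal sketch in Python. It is not the team's actual pipeline. It rests on several assumptions made purely for illustration: synthetic data, a toy 200-point surface standing in for the roughly 60K cortical points, hypothetical task names, and a simple nearest-centroid rule. It also ignores the real-time constraint entirely.

```python
import random

def mean_vector(rows):
    """Average a list of equal-length vectors element-wise."""
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]

def fit_centroids(samples, labels):
    """Compute one mean activation pattern (centroid) per task label."""
    by_task = {}
    for x, y in zip(samples, labels):
        by_task.setdefault(y, []).append(x)
    return {task: mean_vector(rows) for task, rows in by_task.items()}

def predict(centroids, x):
    """Return the task whose centroid is closest (squared Euclidean) to x."""
    def dist(c):
        return sum((a - b) ** 2 for a, b in zip(x, c))
    return min(centroids, key=lambda task: dist(centroids[task]))

def make_reading(task, n_points, active, rng):
    """Synthetic reading: noise everywhere, extra signal at the task's points."""
    x = [rng.gauss(0.0, 1.0) for _ in range(n_points)]
    for i in active[task]:
        x[i] += 3.0
    return x

rng = random.Random(0)
N_POINTS = 200  # toy stand-in for the ~60K cortical points (assumption)
# Hypothetical tasks, each "lighting up" its own made-up set of points.
ACTIVE = {
    "left_hand": range(0, 20),
    "right_hand": range(20, 40),
    "foot": range(40, 60),
}

# Learn one average pattern per task from labeled synthetic readings.
train = [(make_reading(t, N_POINTS, ACTIVE, rng), t) for t in ACTIVE for _ in range(30)]
centroids = fit_centroids([x for x, _ in train], [t for _, t in train])

# Classify fresh readings by finding the closest learned pattern.
test = [(make_reading(t, N_POINTS, ACTIVE, rng), t) for t in ACTIVE for _ in range(10)]
accuracy = sum(predict(centroids, x) == t for x, t in test) / len(test)
print(f"toy accuracy: {accuracy:.2f}")
```

The synthetic tasks here are deliberately easy to separate. Real fMRI signals are far noisier and overlap across tasks, which is part of why the real-time version described above was a challenge.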

What do your day-to-day responsibilities look like at your organisation?

My time is usually split between product development & project work. Product development is us improving our own software, adding features, fixing bugs etc. There is a whole pipeline to this obviously, but essentially we regularly decide on new things to implement or fix, and then split these tasks among us.

On the other side, project work is us developing solutions for corporate customers using the Zetane software. On both sides, I usually work on determining and implementing the best approaches to take in terms of both machine learning pipelines or components and explainability methods.

What are some misconceptions that you believe the average person has about AI?

There is a lot of talk regarding artificial general intelligence (AGI), which is the term used to refer to the type of AI that can either match or outperform humans in pretty much any intellectual task. This is the kind of AI that the sci-fi writers know and love, you know, the type that tends to take over the world and would bring the end of humanity (unless our protagonist interferes). Now, my worry here is that when worrying about AI safety, and specifically AGI posing a threat to humanity, we’re framing the problem wrong.

This is a very tangled problem admittedly, and when attempting to determine the threat of AGI to humanity, we need to untangle it a bit. First question: Is AGI possible to begin with? Is it actually viable to build such an AI in the, say, next 50 years? Expert opinions on this diverge strongly, but for the sake of argument, let’s assume we will have the technical expertise and knowledge to build AGI. Next question: If AGI is possible, will it be a friend or foe? Now, this is where it gets fishy, because it assumes we will actually reach AGI without destroying ourselves first -- and that is a very bold statement, dare I say.

What we seem to forget is that AGI is not a prerequisite for the demise of humanity. All it takes is a sufficiently powerful AI in the wrong hands, be that out of malice or incompetence. It is much more likely that we’ll reach a form of AI that is not “general” yet but is smart enough to perform a selection of lethal tasks autonomously with great agency. It probably won’t be able to beat you in chess, but it may be able to disarm complex security systems, shut off electrical grids, poison large water supply networks etc.

In an ideal world, all AI development should be carried out under strict AI safety standards (which we have yet to come up with as the AI community, but at least it’s a hot topic) in order to prevent the release of any sort of unsafe AI into the wild. Yet the driving forces behind most AI development will be willing to forgo such standards (or even make exceptions to them) for the sake of political or economic incentives. What happens when they lose control of such an AI?

In short, we’re thinking too far ahead when we’re worrying about AGI; unless we come up with robust ways to promote safe AI development, we will never get there to begin with.

What advice would you give to someone wishing to start their career in artificial intelligence?

Invest in your math education in college and afterwards. Having a solid foundation of math and statistics goes a long way in a career in AI -- people who are able to understand the mathematical foundations of novel concepts in AI/Machine Learning are much harder to come by than people who are proficient in Machine Learning frameworks.

Are there any books, blogs, or any other resources that you highly recommend on the subject of AI?

Lil’Log: OpenAI research scientist Lilian Weng’s personal blog. She usually blogs about the mathematical foundations of AI tools, and tends to pick one specific topic at a time and go really in-depth. Highly recommended for the more mathematically inclined.

Jay Alammar: ML visualization expert Jay Alammar’s (currently at cohere.ai) personal blog. He mostly focuses on language models and walks you through how they work in a visual manner. The posts are a joy to read even if NLP is not your area.

Otoro: Google Brain research scientist David Ha’s personal blog. Ha is a well-known figure especially in the field of reinforcement learning, and his blog is a great mashup of his work in RL and his interest in design -- both technical and visual-heavy.

Distill: I’ve left Distill for last because pretty much everyone who’s involved in AI has at least heard of it at some point. Led by Chris Olah (Anthropic, previously OpenAI and Google Brain), it’s probably the most comprehensive and visual AI blog there is. Unfortunately, they’re on hiatus right now, but the site is still well maintained and the amount of content and work put into it is mind-boggling.
