What is MLOps?

MLOps stands for Machine Learning Operations. MLOps refers to the set of practices and tools that facilitate the end-to-end lifecycle management of machine learning models, from development and training to deployment and monitoring.

The primary objective of MLOps tools is to address the unique challenges associated with deploying and managing machine learning models in real-world scenarios. By providing automated solutions to specific challenges, MLOps tools enable data scientists and machine learning engineers to focus more on building robust models, rather than spending excessive time on infrastructure and operational concerns.

Why Is MLOps Useful?

MLOps matters because its practices address the challenges and complexities that arise when deploying and managing machine learning models in real-world production environments. Below are some key reasons why MLOps is needed:

Efficient Model Deployment: As DevOps does for software, MLOps streamlines the process of deploying machine learning models into production. By automating tasks, it reduces the time and effort required to put models into operation.

Collaboration and Knowledge Sharing: MLOps encourages collaboration among data scientists, developers, and operations teams, combining their expertise to build more efficient, faster, and more scalable machine learning models.

Scalability: MLOps lets organizations scale and manage their work, so that thousands of models can be overseen, controlled, and monitored for continuous integration, continuous delivery, and continuous deployment. Specifically, MLOps provides reproducibility of ML pipelines, enabling closer collaboration across data teams, reducing conflict with DevOps and IT, and accelerating release velocity.

Why and When to Employ MLOps

To understand when to employ MLOps, it’s important to acknowledge some of the challenges you may encounter and how MLOps can help you overcome them.

Issues with monitoring: Evaluating machine learning model health manually is very time-consuming. MLOps enables the establishment of monitoring and alerting systems that track model performance in production, which allows for proactive identification of issues and timely model retraining or updates.
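As a rough illustration of what such a monitoring and alerting check can look like, here is a minimal sketch that tracks accuracy over a sliding window of recent predictions. The class name `AccuracyMonitor`, the window size, and the 0.75 threshold are assumptions made for this example; real monitoring tools track many more signals.

```python
from collections import deque


class AccuracyMonitor:
    """Track accuracy over a sliding window of recent labeled predictions."""

    def __init__(self, window_size: int = 100, threshold: float = 0.9):
        self.threshold = threshold
        # A deque with maxlen drops the oldest outcome automatically.
        self.outcomes = deque(maxlen=window_size)

    def record(self, predicted, actual) -> None:
        self.outcomes.append(predicted == actual)

    def accuracy(self) -> float:
        if not self.outcomes:
            return 1.0  # no evidence of a problem yet
        return sum(self.outcomes) / len(self.outcomes)

    def needs_attention(self) -> bool:
        """True when the window is full and accuracy is below the threshold."""
        full = len(self.outcomes) == self.outcomes.maxlen
        return full and self.accuracy() < self.threshold


# Hypothetical stream of (prediction, ground truth) pairs.
monitor = AccuracyMonitor(window_size=4, threshold=0.75)
for predicted, actual in [(1, 1), (0, 0), (1, 0), (0, 1)]:
    monitor.record(predicted, actual)

if monitor.needs_attention():
    print(f"ALERT: rolling accuracy fell to {monitor.accuracy():.2f}")
```

Waiting for a full window before alerting is a deliberate choice here: it avoids raising an alarm on the first one or two mispredictions after a deployment.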

Issues with model governance and compliance: If your machine learning projects involve sensitive data or must adhere to specific regulatory requirements, MLOps practices help ensure model governance, compliance, and security. MLOps model governance can help you with production access control, traceable model results, model audit trails, and model upgrade approval workflows.

Issues with model deployment: Even when models are deployed, it is sometimes not at the speed or scale the business needs. MLOps helps manage the deployment process, monitor model performance, and handle updates or retraining as needed.

Issues with lifecycle management: Often, organizations cannot regularly update models in production because the process is resource intensive. MLOps can help by automating retraining and redeployment, so that models in production do not go stale.

The Leading MLOps Tools

MLOps tools encompass a wide range of technologies, frameworks, and platforms designed to enhance the collaboration, scalability, reproducibility, and reliability of machine learning projects. These tools offer a unified and integrated approach to managing the entire lifecycle of machine learning models, empowering organizations to build, deploy, monitor, and iterate on models efficiently.

One of the defining characteristics of the MLOps landscape in 2023 is the coexistence of open-source and closed-source solutions. For the rest of this article, we outline the best MLOps tools currently on the market to help you make the most appropriate decision for your organization.

MLflow

MLflow is an open-source lifecycle management platform that allows for more customizations than many of its closed-source competitors. This tool also integrates with several other popular MLOps solutions, such as H2O.ai, Amazon SageMaker, Databricks, Google Cloud, Azure Machine Learning, Docker, and Kubernetes.

Features:

- MLflow tracking to record and query experiments

- MLflow projects to package data science sources for reproducibility.

- MLflow Model Registry, a central model store that provides versioning, stage transitions, annotations, and management of machine learning models.

- MLflow models to deploy models in varied serving environments
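To make the ideas of versioning and stage transitions concrete, the sketch below implements a toy in-memory registry using only the standard library. It is not MLflow's API: the names `ToyRegistry` and `ModelVersion`, the stage labels, and the artifact URIs are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field

# Stage labels assumed for this example.
STAGES = ("None", "Staging", "Production", "Archived")


@dataclass
class ModelVersion:
    version: int
    artifact_uri: str
    stage: str = "None"
    description: str = ""


@dataclass
class ToyRegistry:
    models: dict = field(default_factory=dict)

    def register(self, name: str, artifact_uri: str) -> ModelVersion:
        """Store a new version; version numbers start at 1 and only grow."""
        versions = self.models.setdefault(name, [])
        mv = ModelVersion(version=len(versions) + 1, artifact_uri=artifact_uri)
        versions.append(mv)
        return mv

    def transition(self, name: str, version: int, stage: str) -> None:
        if stage not in STAGES:
            raise ValueError(f"unknown stage: {stage}")
        for mv in self.models[name]:
            # Only one version may hold Production; archive the previous one.
            if stage == "Production" and mv.stage == "Production":
                mv.stage = "Archived"
        self.models[name][version - 1].stage = stage

    def latest(self, name: str, stage: str):
        matches = [mv for mv in self.models[name] if mv.stage == stage]
        return matches[-1] if matches else None


registry = ToyRegistry()
registry.register("churn", "s3://example-bucket/churn/1")  # hypothetical URI
registry.register("churn", "s3://example-bucket/churn/2")  # hypothetical URI
registry.transition("churn", 1, "Production")
registry.transition("churn", 2, "Production")  # version 1 is archived
print(registry.latest("churn", "Production").version)
```

A serving system would then ask the registry for the current Production version by name instead of hard-coding a file path, which is what makes promotions and rollbacks traceable.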

AWS SageMaker

Amazon Web Services SageMaker is a one-stop solution for MLOps. You can train and accelerate model development, track and version experiments, catalog ML artifacts, integrate CI/CD ML pipelines, and deploy, serve, and monitor models in production seamlessly.

Features:

- CI/CD through source and version control, automated testing, and end-to-end automation.

- Automate ML training workflows.

- Continuous monitoring and retraining of models to maintain quality.

- Security features for policy management and enforcement, infrastructure security, data protection, authorization, authentication, and monitoring.

Comet ML

Comet ML is a platform for tracking, comparing, explaining, and optimizing machine learning models and experiments. You can use it with any machine learning library, such as Scikit-learn, PyTorch, TensorFlow, and Hugging Face.

Features:

- Tracking of hyperparameters, metrics, code, and output, with easy sharing of results with the rest of the team.

- Tracking of installed packages and GPU usage, with multiple experiments viewable and manageable from a single location.

- Creates graphs automatically for Machine Learning analysis.

- Auto-logging of Keras, TensorFlow, PyTorch, and more.

H2O MLOps

H2O MLOps is one of many top-tier solutions provided by H2O for machine learning and artificial intelligence tooling. Many MLOps teams select this tool because of the flexibility of testing and deployment environments that work with the platform. Also, the platform has the flexibility to work with cloud, on-premises, and container infrastructures.

Features:

- H2O MLOps can be easily integrated with other H2O tools, such as Driverless AI and H2O's open-source offerings.

- A model repository that includes version control, access control, and data logging.

- Significant support for major cloud providers and on-premise Kubernetes distributions.

- Update, troubleshoot, and A/B test models in different environments.

Censius AI

Censius AI offers end-to-end AI observability that delivers automated monitoring and proactive troubleshooting to build reliable models throughout the ML lifecycle. You can set up Censius using the Python or Java SDK or the REST API, and deploy it on-premises or in the cloud.

Features:

- Native support for A/B test frameworks.

- Data explainability for tabular, image, and textual datasets.

- Customizable dashboards for data, models, and business metrics.

- Monitor performance degradation, data drift, and data quality.

Valohai

Valohai provides a collaborative environment for managing and automating machine learning projects. With Valohai, you can define pipelines, track changes, and run experiments on cloud resources or your own infrastructure. It simplifies the machine learning workflow and offers features for version control, data management, and scalability.

Features:

- Good security with features such as Single Sign-On (SSO), Two Factor Authentication (2FA), and Active Directory (AD).

- API-friendly ML pipelines that can automate model retraining.

- Visualization for parallel hyperparameter tuning runs.

- Workflow automation with data fetching, preprocessing, synthetic data generation, and hyperparameter sweeps.
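For readers unfamiliar with the term, a hyperparameter sweep simply runs the same training job over a grid of settings and keeps the best result. The sketch below shows the idea with the standard library only; it is not Valohai's API, and `train_and_score` is a hypothetical stand-in for a real training run.

```python
from itertools import product


def train_and_score(learning_rate: float, depth: int) -> float:
    """Hypothetical stand-in for a real training run; returns a score."""
    return 1.0 - abs(learning_rate - 0.1) - 0.01 * abs(depth - 4)


# Grid of settings to try (values assumed for illustration).
grid = {"learning_rate": [0.01, 0.1, 0.5], "depth": [2, 4, 8]}

results = []
for values in product(*grid.values()):
    # Pair each combination of values with its parameter names.
    params = dict(zip(grid.keys(), values))
    results.append((train_and_score(**params), params))

best_score, best_params = max(results, key=lambda item: item[0])
print(best_params)
```

Because every combination is independent of the others, a platform can run them in parallel on separate machines and compare the results afterwards.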

Neptune.ai

Neptune.ai is an ML metadata store built for research and production teams that run many experiments. It allows teams to log and visualize experiments and to track hyperparameters, metrics, and output files. It also provides collaboration features, such as sharing experiments and results, making it easier for teams to work together. It has 20+ integrations with MLOps tools and libraries.

Features:

- Version history and data comparisons through table visualizations.

- ML data store for model building metadata management.

- Database dashboard with data visualizations.

- Model registry for version history and easy search.

Prefect

Prefect is an open-source, lightweight tool built for end-to-end machine-learning pipelines. It is a modern data stack for monitoring, coordinating, and orchestrating workflows between and across applications.

Features:

- Two options for databases, either Prefect Orion UI or Prefect Cloud.

- Prefect Orion UI is an open-source, locally hosted orchestration engine and API server.

- Prefect Cloud is a hosted service for you to visualize flows, flow runs, and deployments.

- Manage accounts, workspace, and team collaboration.

Metaflow

Metaflow helps data scientists and machine learning engineers build, manage, and deploy data science projects. It provides a high-level API that makes it easy to define and execute data science workflows. It was built for data scientists so they can focus on building models instead of worrying about MLOps engineering.

Features:

- It provides a number of features that help improve the reproducibility and reliability of data science projects.

- Can be integrated with a variety of other tools and services.

- Design workflow, run it on scale, and deploy the model in production.

- API is available for R language.

Kedro

Kedro is a Python library for building modular data science pipelines. You can use it for creating reproducible, maintainable, and modular data science projects. It integrates the concepts from software engineering into machine learning, such as modularity, separation of concerns, and versioning.

Features:

- Create, visualize, and run the pipelines.

- Deployment on a single or distributed machine.

- Set up dependencies and configuration.

- Create maintainable data science code.
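The idea of a modular pipeline can be sketched in a few lines: each step is a plain function wired to named inputs and a named output, and a small runner works out the execution order. This is a conceptual sketch using only the standard library, not Kedro's API; `Node`, `run_pipeline`, and the dataset names are assumptions made for the example.

```python
from typing import Callable, NamedTuple


class Node(NamedTuple):
    func: Callable
    inputs: list
    output: str


def run_pipeline(nodes: list, catalog: dict) -> dict:
    """Run each node once all of its inputs exist in the catalog."""
    data = dict(catalog)
    pending = list(nodes)
    while pending:
        ready = [n for n in pending if all(i in data for i in n.inputs)]
        if not ready:
            raise ValueError("unresolvable inputs; check node wiring")
        for node in ready:
            data[node.output] = node.func(*(data[i] for i in node.inputs))
            pending.remove(node)
    return data


def clean(rows):
    return [r for r in rows if r is not None]


def mean(rows):
    return sum(rows) / len(rows)


# Nodes are declared out of order on purpose; wiring decides execution order.
nodes = [
    Node(mean, ["clean_rows"], "average"),
    Node(clean, ["raw_rows"], "clean_rows"),
]
result = run_pipeline(nodes, {"raw_rows": [1, None, 2, 3]})
print(result["average"])
```

Keeping the functions free of any I/O or ordering logic is the separation of concerns at work: each one can be unit tested alone, and the wiring can change without touching the functions.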

Giskard

Giskard is a free and open-source testing framework dedicated to machine learning models. The tool is a Python library that automatically identifies vulnerabilities, such as performance biases, data leakage, and spurious correlation, in AI models ranging from tabular models to LLMs.

Features:

- Drill down on model issues

- Instantly generate test suites for models

- Detects vulnerabilities in AI models

- Integrates seamlessly with popular tools, such as AWS, Kaggle, and GitHub

MindsDB

MindsDB enables you to construct AI using SQL. The solution enhances SQL to streamline the creation of AI tools that require access to real-time data to conduct their tasks. It has over 130 data integrations and over 20 AI/ML integrations.

Features:

- Abstracts LLMs, time series, regression, and classification models as virtual tables (AI-Tables)

- Design RAG and Semantic Search systems from your database data

- Predict future behaviour

- Recommendation engine to offer usage-based in-product suggestions

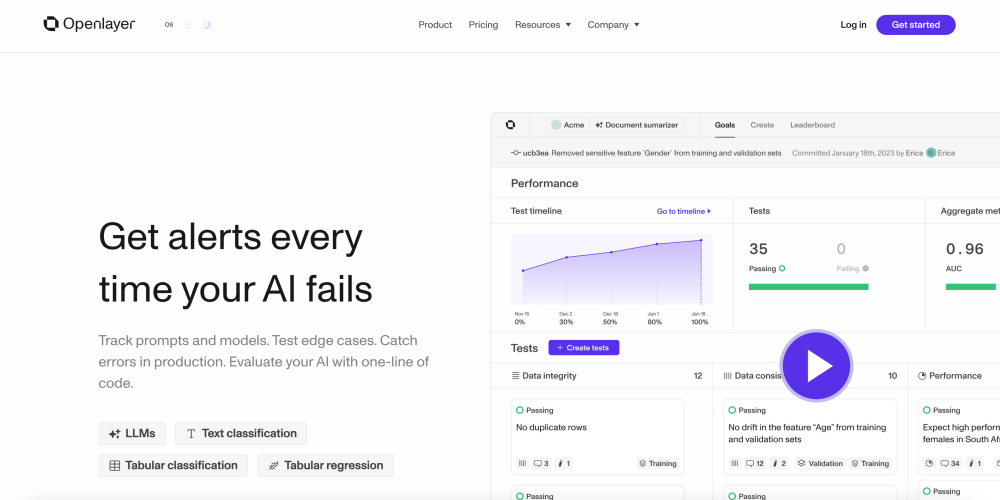

Openlayer

Openlayer is a platform for tracking, testing, and monitoring your AI. To cover a range of use cases, it supports a variety of task types such as LLMs, text classification, tabular classification, and tabular regression.

Features:

- Monitor with real-time alerts (email, Slack, or Openlayer app)

- Upload models and datasets straight from training notebooks or pipelines

- SOC 2 Type 2 compliant platform

- Commit-style versioning system

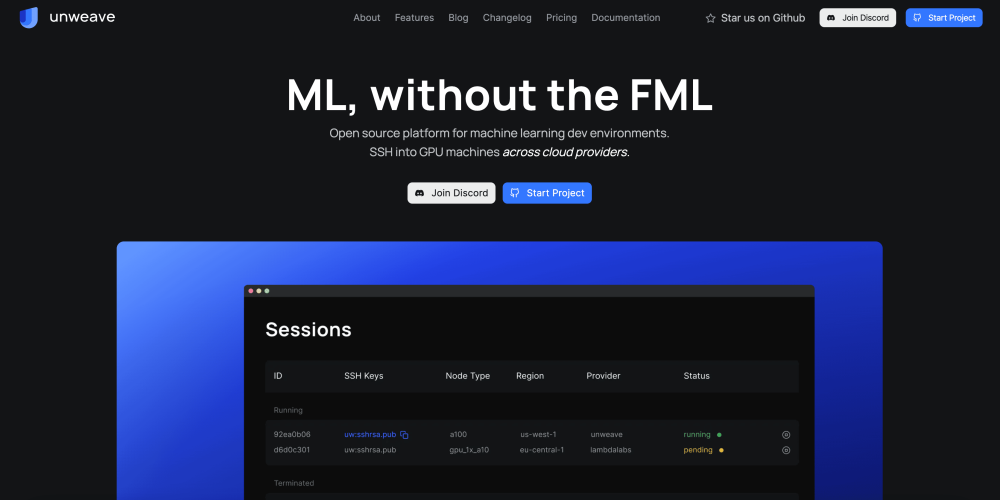

Unweave

Unweave is a free and open-source platform for machine learning dev environments. The solution will soon be able to automatically version your code, data, and artifacts with Git, with built-in CI/CD integrations. As well as allowing you to start ML experiments directly from your Git repo, Unweave will automatically clone your code and data.

Features:

- Real-time view of projects and sessions

- Unweave sets up and configures your cloud dev environment for you

- Can be run from your terminal and CI/CD pipelines

- Built on top of SSH and Git

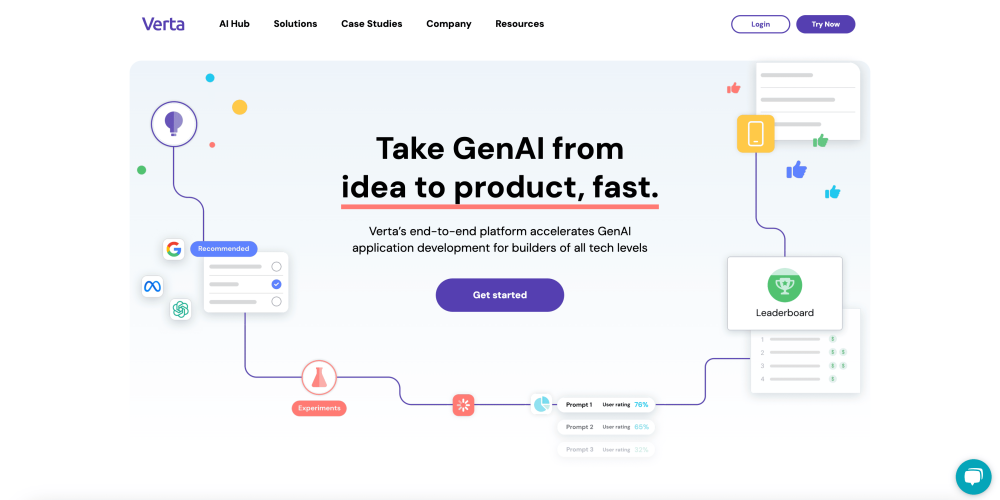

Verta

Verta's end-to-end platform aims to accelerate GenAI application development for builders of all tech levels. Verta's Starter Kits enable you to start testing numerous models and prompts right away; the results are automatically generated and ready to be evaluated.

Features:

- Automated testing and AI-powered prompt and refinement suggestions

- Empowers builders of all tech levels to achieve high-quality model outputs quickly

- Easily compare and share results

- User feedback is automatically collected post-deployment

Neuton TinyML

With Neuton TinyML you can automatically build small models without coding and embed them into microcontrollers and sensors. The average model size achieved by their neural networks is less than 1 KB.

Features:

- No-code Tiny AutoML platform

- Run inferences without turning to the cloud

- Unique patented global optimisation algorithm

- No manual search for neural network parameters

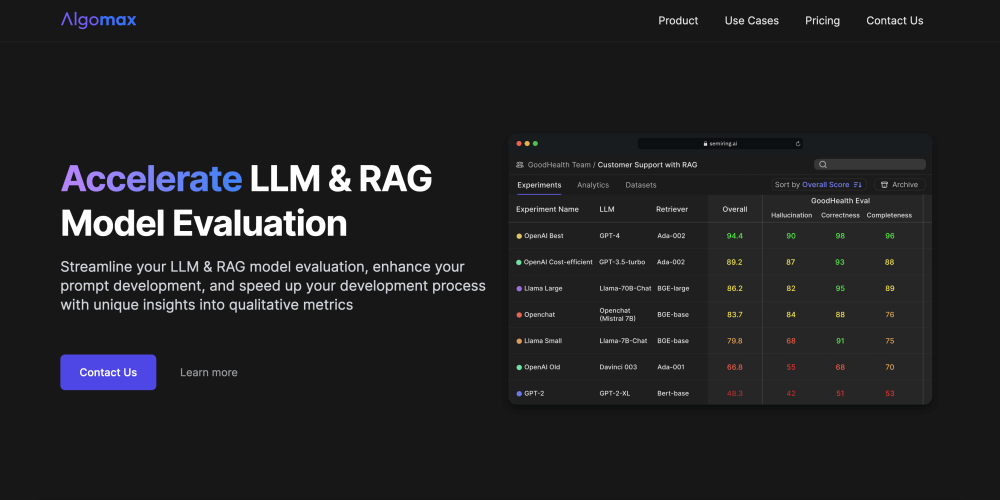

Algomax

Algomax is an MLOps tool designed to accelerate LLM and RAG model evaluation. It aims to optimize prompt development and speed up your development process with insights into qualitative metrics. Pricing is not advertised on the Algomax website; to find this information you will need to contact Algomax directly.

Features:

- LLM-based engine tailored to capture the nuances of LLM outputs

- Attain specific guidance for enhancing your model's capabilities

- Organised dashboard to keep all your experiment data in one accessible place

- Rapid model evaluation

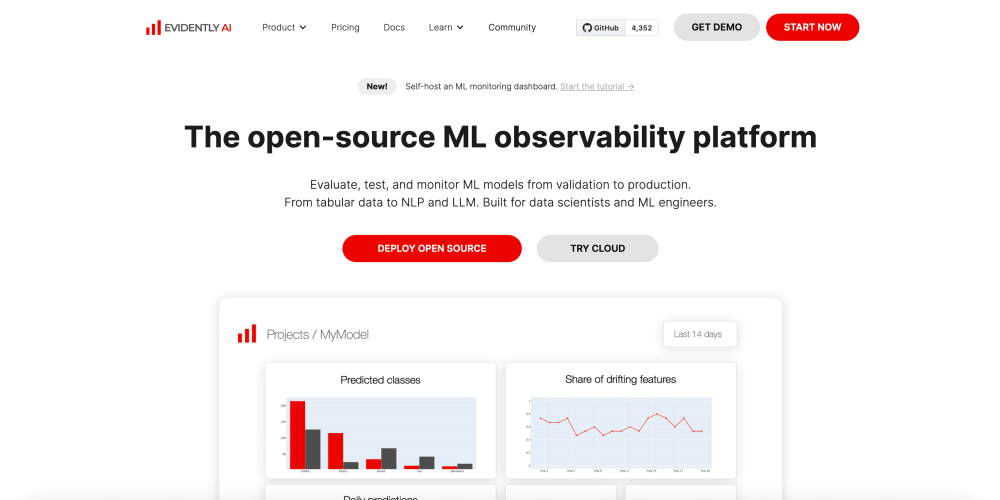

Evidently AI

Evidently AI is an open-source ML model monitoring system. It helps analyze machine learning models during development, validation, or production monitoring. The tool generates interactive reports from pandas DataFrames.

Features:

Evidently AI has three main elements:

- Batch model checks for performing structured data and model quality checks.

- Interactive dashboards that include reports for data drift, model performance, and target virtualization.

- Real-time monitoring that tracks data and model metrics from a deployed ML service.
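Data drift checks of this kind usually compare the distribution of a feature in production against a reference sample. One common measure is the Population Stability Index (PSI); the sketch below computes it with the standard library only. It is an illustrative calculation, not Evidently AI's implementation, and the bin count, the floor for empty bins, and the 0.2 rule-of-thumb threshold are assumptions.

```python
import math


def psi(reference, current, bins: int = 5) -> float:
    """Population Stability Index between two numeric samples."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 for a constant sample

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Clamp values outside the reference range into the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    ref, cur = shares(reference), shares(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


reference = [float(i) for i in range(100)]
shifted = [v + 30 for v in reference]  # simulated drift in production data

print(psi(reference, reference))
if psi(reference, shifted) > 0.2:  # 0.2 is a common rule of thumb, not a standard
    print("drift detected")
```

The bin edges are taken from the reference sample on purpose, so that production values falling outside the training range pile up in the edge bins and push the index up.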

In summary, these MLOps tools bring several benefits to the field of machine learning operations. Whilst the tools are similar, each has its own set of core features, outlined above, that differentiates it from the competition. We hope this article has helped you decide which MLOps tool is most suitable for your organization.
